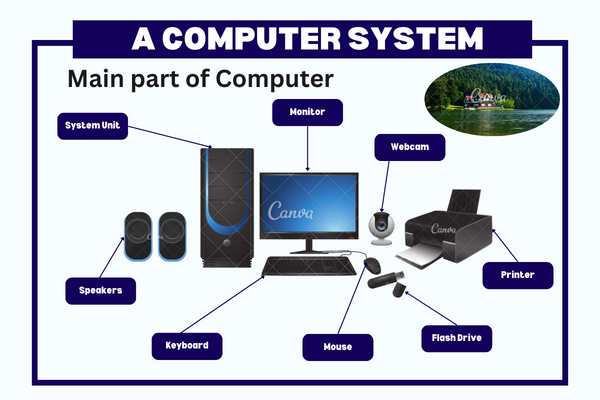

What is a Computer: A computer is an electronic device that takes input, processes it according to a set of instructions, and produces information as output. In very simple words, any device that takes input, processes it, and gives output can be called a computer.

Meaning of Computer

To most people, a computer means either a desktop or a laptop. However, many things that we do not usually think of as computers are also computers of a kind, such as calculators, microwaves, televisions, and digital cameras. A calculator, for example, is an electronic device that takes input, processes it, and then gives output.
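
The input, process, output cycle described above can be sketched in a few lines of Python. This is only an illustrative sketch, not how a real calculator is built: the function names `process` and `calculator` and the "number operator number" input format are assumptions made for this example.

```python
def process(a: float, op: str, b: float) -> float:
    """Processing step: apply the requested arithmetic operation."""
    operations = {
        "+": lambda x, y: x + y,
        "-": lambda x, y: x - y,
        "*": lambda x, y: x * y,
        "/": lambda x, y: x / y,
    }
    if op not in operations:
        raise ValueError(f"unsupported operation: {op}")
    return operations[op](a, b)


def calculator(expression: str) -> str:
    """Run one input -> process -> output cycle on text such as '6 * 7'."""
    # Input: split the typed text into two numbers and an operator.
    left, op, right = expression.split()
    # Process: follow the instruction given by the operator.
    result = process(float(left), op, float(right))
    # Output: present the result in a readable form.
    return f"{expression} = {result:g}"


print(calculator("6 * 7"))  # 6 * 7 = 42
```

The same three steps apply to a microwave or a digital camera; only the kind of input and output changes.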

Where is a computer used?

The computer is one of the most widely used tools of the 21st century. We use computers to work with digital information of every kind and to connect to the internet, including the social media we use every day. With their help, very large calculations can be solved quickly and easily. Laptop and desktop computers are called general-purpose computers because they can do many kinds of work. With them we can edit photos and videos, learn programming languages and write code, and do work that would once have been hard to imagine.

Important Uses of Computer:-

- Business

- Manufacturing

- Banking

- Education

- Sports

- Science & Research

- Health care

- Graphic design and digital painting

- Photo and video editing

- Communication & law enforcement

Some Important Features of Computer:-

- Speed

- Accuracy

- Versatility

- Reliability

- Storage capability

- Diligence

- Limitation

- Multitasking

Types of Computer

Computers are divided into three types based on how they handle data.

- Analog computer

- Digital computer

- Hybrid computer

Analog Computer:- These computers are designed to process analog data, that is, continuously changing physical quantities. In other words, they are computers that measure physical quantities. Their memory is very limited, and they are generally slower than digital computers. For example: thermometers, analog watches, voltmeters, speedometers, seismographs, flight simulators, etc.

Digital computer:- This is a computer that works very fast. It accepts data as input, processes it in the form of binary digits (0 and 1), and returns information as output. For example: personal computers, desktops, laptops, smartphones, etc.
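
To make the idea of binary numbers concrete, here is a small Python sketch that shows how a number and a short piece of text look as 0s and 1s. The helper names `to_binary` and `text_to_bits`, the 8-bit width, and the use of UTF-8 are assumptions chosen for illustration; real hardware stores data in many different formats.

```python
def to_binary(number: int, width: int = 8) -> str:
    """Return the binary digits of a non-negative integer, padded to `width` bits."""
    if number < 0:
        raise ValueError("this sketch handles non-negative integers only")
    return format(number, f"0{width}b")


def text_to_bits(text: str) -> list[str]:
    """Encode text as UTF-8 and show each byte as eight binary digits."""
    return [to_binary(byte) for byte in text.encode("utf-8")]


print(to_binary(5))        # 00000101
print(text_to_bits("Hi"))  # ['01001000', '01101001']
```

Everything a digital computer handles, whether numbers, text, images, or sound, is ultimately stored as patterns of bits like these.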

Hybrid computer:- A hybrid computer combines the functions of analog and digital computers. In such a system, analog and digital components work together. For example: ultrasound machines, ECG machines, dialysis machines, CT scan machines, automated teller machines (ATMs), etc.

History of Computer:-

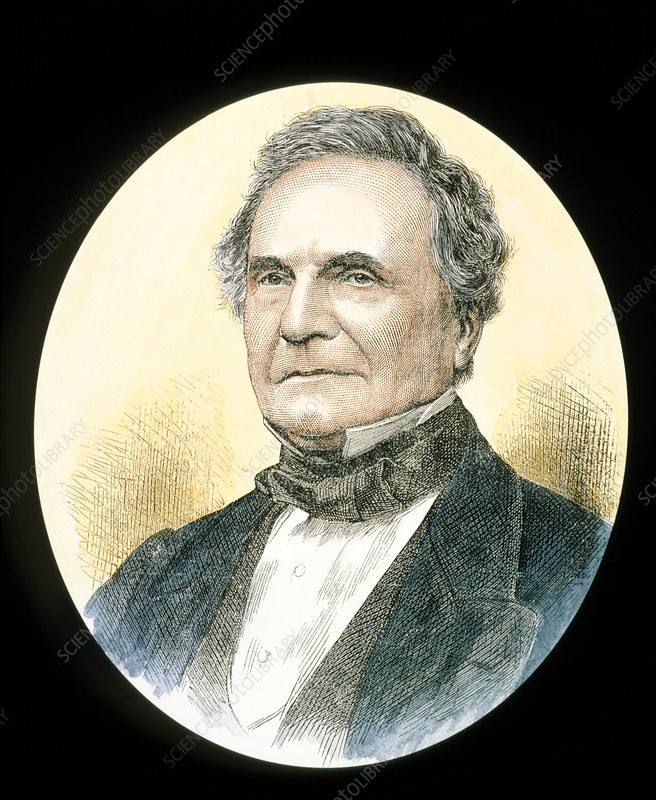

If we talk about the history of computers, many scientists developed various kinds of calculating machines, but these early machines had many shortcomings. Then came a great scientist, Charles Babbage, whose designs addressed many of the problems of earlier machines and changed what such machines could do. For this reason, we know him as the father of the computer.

In the early 1820s, Charles Babbage began work on the Difference Engine, a mechanical calculating machine designed to compute numerical tables such as logarithmic tables. In the 1830s he went on to design a more general calculating machine, the Analytical Engine.

Introduction

The history of computers is a fascinating journey that spans centuries, marked by innovation, creativity, and the relentless pursuit of knowledge. From the abacus to the powerful machines of the modern era, the evolution of computers has shaped and defined human progress. In this exploration of the history of computers, we will embark on a chronological voyage, uncovering the key milestones and breakthroughs that have led to the digital landscape we navigate today.

Ancient Beginnings: The Abacus and Analog Devices

The roots of computer history can be traced back to ancient civilizations, where rudimentary counting devices like the abacus were employed. The abacus, with its beads and wires, allowed early mathematicians to perform basic arithmetic calculations. While these devices were not “computers” in the modern sense, they laid the foundation for the numerical concepts that would become integral to computational systems.

Moving forward, analog computing devices came into wider use, such as the astrolabe, which dates back to antiquity, and the slide rule, invented in the 17th century. These instruments enabled more sophisticated calculations, particularly in navigation, astronomy, and mathematics. Analog devices operated on continuous data and provided a glimpse into the potential for automated computation.

The Mechanical Era: Babbage and the Analytical Engine

The 19th century marked a pivotal moment in the history of computers with the visionary work of Charles Babbage. Babbage conceptualized the Analytical Engine, a mechanical device designed to perform general-purpose computations through the use of punched cards and a memory unit. Though the Analytical Engine was never fully realized during Babbage’s lifetime due to technological limitations, it laid the groundwork for future computing concepts.

Ada Lovelace, an insightful mathematician and collaborator of Babbage, is often credited with writing the first published program for the Analytical Engine. Her visionary ideas went beyond mere numerical calculations, foreseeing the potential for computers to process symbols and create music, paving the way for the concept of computer programming.

The Birth of Electronic Computers

The 20th century witnessed a seismic shift in computer history with the advent of electronic computers. The Electronic Numerical Integrator and Computer (ENIAC), completed in 1945, is often considered the first electronic general-purpose computer. ENIAC was a colossal machine, occupying an entire room and comprising thousands of vacuum tubes.

Following ENIAC, other landmark machines emerged, such as the Universal Automatic Computer (UNIVAC), which made history as one of the first commercially produced computers. These machines marked a departure from the mechanical era, utilizing electronic components for faster and more reliable computation. The transistor, invented in 1947 and adopted in computers during the 1950s, further revolutionized computing by reducing the size and power consumption of computers.

The Computer Revolution: Rise of Mainframes and Minicomputers

The 1950s and 1960s witnessed the rise of mainframe computers, powerful machines that could process vast amounts of data for large organizations. IBM’s System/360, introduced in 1964, was a groundbreaking mainframe series that standardized computer architecture, allowing compatibility between different models. Mainframes became the backbone of industries such as banking, government, and scientific research.

Simultaneously, the concept of minicomputers emerged, offering smaller and more affordable computing solutions. Pioneering companies like DEC (Digital Equipment Corporation) played a crucial role in popularizing minicomputers, making computing resources accessible to a wider range of businesses and researchers.

The Microprocessor Revolution: Birth of Personal Computers

The 1970s witnessed a significant shift with the development of microprocessors, compact integrated circuits that contained the essential components of a central processing unit (CPU). This innovation paved the way for the era of personal computing. In 1975, the Altair 8800, widely regarded as the first commercially successful personal computer, was sold as a build-it-yourself kit. That same year, Bill Gates and Paul Allen founded Microsoft to write software for it, an early milestone for the personal computer software industry.

The late 1970s and early 1980s saw the introduction of iconic personal computers like the Apple II and IBM PC. These machines, equipped with microprocessors, brought computing power to homes and small businesses, transforming how people worked and interacted with technology. The graphical user interface (GUI), popularized by Apple's Macintosh in 1984, further enhanced user-friendliness, making computers accessible to a broader audience.

The Internet Age: Connecting the World

The 1990s ushered in the era of the public internet, a transformative development that forever changed the landscape of computing. Tim Berners-Lee's proposal of the World Wide Web in 1989, built on top of the existing internet, made the global network of interconnected computers easy for ordinary people to use. The internet has enabled the exchange of information on an unprecedented scale, fostering communication and collaboration among individuals and organizations worldwide.

Personal computers evolved into multimedia machines capable of handling graphics, audio, and video, opening new possibilities for entertainment, education, and business. The dot-com boom in the late 1990s saw the rise of internet-based businesses, solidifying the internet’s role as a catalyst for economic and social change.

Mobile Computing and the 21st Century

The 21st century witnessed the proliferation of mobile computing, with the advent of smartphones and tablets. These portable devices, equipped with powerful processors and high-speed internet connectivity, became ubiquitous tools for communication, entertainment, and productivity. The development of mobile apps created new avenues for software innovation, transforming the way people access information and services.

Cloud computing emerged as a paradigm shift, enabling users to access and store data remotely. This technology not only enhanced collaboration and data management but also reduced the reliance on physical hardware. Virtualization, artificial intelligence, and machine learning further advanced the capabilities of computers, ushering in a new era of automation and intelligent systems.

Conclusion

The history of computers is a testament to human ingenuity and the relentless pursuit of knowledge. From the humble abacus to the powerful computers of the 21st century, each milestone in computer history has shaped the way we live, work, and connect with the world. As we stand on the shoulders of giants, it is essential to recognize the collective effort of innovators, engineers, and visionaries who have propelled us into the digital age. The journey is far from over, and the history of computers continues to unfold, promising new horizons and possibilities for the generations to come.